|

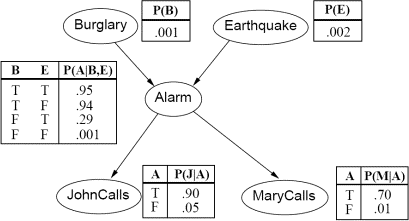

- Run Gibbs sampling 1000 times for 2000 steps starting each time from random intial values for the hidden variables (drawn from a uniform distribution) and verify that the conditional probability of burglary given that both John and Mary call is approximately 0.284. In order to estimate this conditional probability calculate the mean and the SEM (standard error of the mean) for the 1000 values of the binary random variable that is one if burglary = true and 0 otherwise (after 2000 steps).

- Suppose now that you learn that there was an earthquake in the area. Run Gibbs sampling to estimate the probability of a burglary (given that John and Mary both called, and that there was an earthquake). Interpret the result.

|

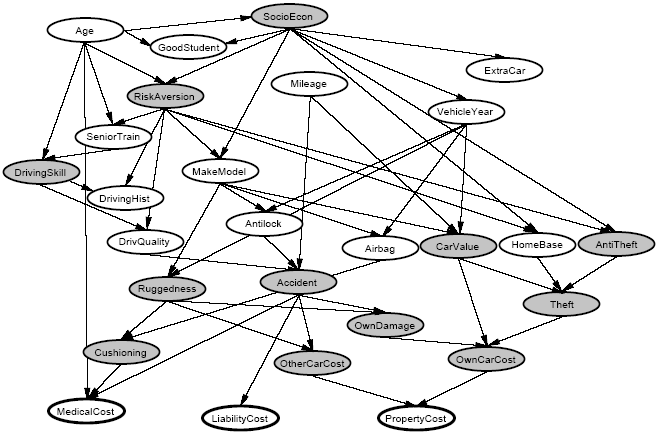

The network has 27 variables (usually the 12 shaded variables are considered hidden or unobservable, while the other 15 are observable) and over 1400 parameters. An insurance company would be interested in predicting the bottom three "cost" variables, given all or a subset of the other observable variables. The network has been provided in the file insurance.mat.

- You should estimate the cost of property damage, i.e. the probabilities of the values of the variable PropertyCost, for an adolescent driving a car (Age = Adolescent) with about 50,000 miles (Mileage = FiftyThou) and no anti-lock brakes (Antilock = False).

Run Gibbs sampling for a 1000 times for 1, 10, 100, and 1000 steps starting from random intial values for the hidden variables (drawn from a uniform distribution) and plot the dependence of the resulting probability distribution (obtained for the 1000 trials) on the number of steps. Because some of the factors of the joint probability distribution are 0 you have to make sure that the probability of the intial state of the random variables is larger than zero (if not choose another initial state).

How long ist the burn-in time (number of steps) that is required to sample from the stationary probability distribution?

- Try out at least one other query of your own invention and report the results. Comment on why your results did or did not make sense.

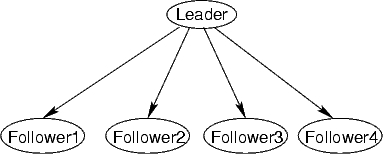

Each network in this family has a single "leader" variable, and some number of "follower" variables as shown in Fig. 8. The idea is that the leader selects a bit at random (0 or 1 with equal probability), and then all of the followers choose their own bits, each one copying the leader's bit with 90% probability, or choosing the opposite of the leader's bit with 10% probability. Leader-follower networks with ![]() followers have been provided in the files leader-follower-k.bn for

followers have been provided in the files leader-follower-k.bn for

![]() .

.

What is the marginal probability distribution for the leader variable, in the absence of any evidence (i.e., none of the other variables have been set to specified values)?

- What is the correct answer for the query given above? (This should be easy to answer.)

- Run Gibbs sampling on a network with 5 followers using the given query. Run for at least 1000000 steps, printing the partial results every 1000 steps. Make a plot of the estimated probability (as estimated by Gibbs sampling) of the leader choosing the bit 0 as a function of the number of steps. In other words, the x-axis gives the number of steps, and the y-axis gives the probability of the leader bit being 0 as estimated after t steps of Gibbs sampling. Does Gibbs sampling seem to converge to the right answer? If so, roughly how long does it seem to take?

- Now repeat the last question on networks with 10, 15 and 20 followers. Run for at least 1000000 steps, printing partial results at least every 1000 steps.

- Can you explain what you observed? Why does Gibbs sampling have such a hard time with these networks? What do these experiments say about the difficulty of detecting convergence?